This is an original DRAFT (12/2005) of a book chapter

edited and published in…

Manual of Geographic Information

Systems,

edited

by Marguerite Madden, 2009

American Society for Photogrammetry,

Bethesda, Maryland, USA

Section 5, Chapter 29, pages 527-585; ISBN

1-57083-086-X

|

|

This book chapter is

based on selected Beyond Mapping

columns by Joseph K. Berry published in GeoWorld magazine from 1996 through 2007. It is intended

to be used as a self-instructional text or in support of formal academic

courses for study of grid-based map analysis and |

Click here for a

printer-friendly version (.pdf)

___________________________

Joseph K. Berry, W.M.

Keck Visiting Scholar in Geosciences

Geography Department,

University of Denver

2050 East Iliff Avenue, Denver, Colorado 80208-0183

Email jkberry@du.edu; Phone 970-215-0825

Table of Contents

(with

Hyperlinks)

________________________________

GIS Modeling and

Analysis

Joseph K. Berry

W.M. Keck Visiting Scholar in Geosciences

Geography Department, University of Denver, 2050 East Iliff Avenue

Denver, Colorado

80208-0183

Email jkberry@du.edu; Phone 970-215-0825

1.0 Introduction

Although

The early 1970s saw Computer Mapping automate the cartographic process. The points, lines and areas defining geographic

features on a map were represented as organized sets of X,Y

coordinates. In turn these data form

input to a pen plotter that can rapidly update and redraw the connections at a

variety of scales and projections. The

map image, itself, is the focus of this processing.

The early 1980s exploited the change in the format and the computer environment

of mapped data. Spatial Database Management Systems were developed that link

computer mapping techniques to traditional database capabilities. The demand for spatially and thematically

linked data focused attention on data issues.

The result was an integrated processing environment addressing a wide

variety of mapped data and digital map products.

During the 1990s a resurgence of attention

was focused on analytical operations and a comprehensive theory of spatial

analysis began to emerge. This

"map-ematical" processing involves spatial

statistics and spatial analysis. Spatial statistics has been used by

geophysicists and climatologists since the 1950s to characterize the geographic

distribution, or pattern, of mapped data.

The statistics describe the spatial variation in the data, rather than

assuming a typical response occurs everywhere within a project area.

Spatial analysis, on the other hand,

expresses a geographic relationships as a series of

map analysis steps, leading to a solution map in a manner analogous to basic

algebra. Most of the traditional

mathematical capabilities, plus an extensive set of advanced map analysis

operations, are available in contemporary

Several sections and chapters in this manual

address specific analytical and statistical techniques in more detail, as well

as comprehensively describing additional modeling applications. In addition, the online book, Map

Analysis: Procedures and Applications in

1.1 Mapping to Analysis of

Mapped Data

The

evolution (or is it a revolution?) of

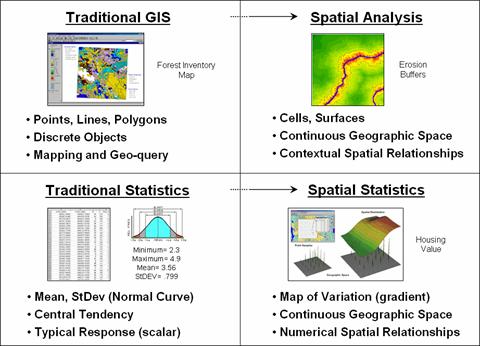

Figure

1.1-1 identifies two key trends in the movement from mapping to map

analysis. Traditional

Figure 1.1-1. Spatial Analysis and

Spatial Statistics are extensions of traditional ways of analyzing mapped data.

Spatial Analysis extends the basic set of discrete map

features of points, lines and polygons to map “surfaces” that represent

continuous geographic space as a set of contiguous grid cell values. The consistency of this grid-based

structuring provides the foothold for a wealth of new analytical tools for

characterizing “contextual spatial relationships,” such as identifying the

visual exposure of an entire road network.

In

addition, it provides a mathematical/statistical framework by numerically

representing geographic space. Traditional Statistics is inherently

non-spatial as it seeks to represent a data set by its typical response

regardless of spatial patterns. The

mean, standard deviation and other statistics are computed to describe the

central tendency of the data in abstract numerical space without regard to the

relative positioning of the data in real-world geographic space.

Spatial Statistics, on the other hand, extends

traditional statistics on two fronts.

First, it seeks to map the variation in a data set to show where unusual

responses occur, instead of focusing on a single typical response. Secondly, it can uncover “numerical spatial

relationships” within and among mapped data layers, such as generating a

prediction map identifying where likely customers are within a city based on

existing sales and demographic information.

1.2 Vector-based Mapping versus

Grid-based Analysis

The

close conceptual link of vector-based desktop mapping to manual mapping and

traditional database management has fueled its rapid adoption. In many ways, a database is just picture

waiting to happen. The direct link

between attributes described as database records and their spatial

characterization is easy to conceptualize.

Geo-query enables clicking on a map to pop-up the attribute record for a

location or searching a database then plotting all of the records meeting the

query. Increasing data availability and

Internet access, coupled with decreasing desktop mapping system costs and

complexity, make the adoption of spatial database technology a practical reality.

Maps

in their traditional form of points, lines and polygons identifying discrete

spatial objects align with manual mapping concepts and experiences. Grid-based maps, on the other hand, represent

a different paradigm of geographic space that opens entirely new ways to address

complex issues. Whereas traditional vector

maps emphasize ‘precise placement of physical features,’ grid maps seek to

‘analytically characterize continuous geographic space in both real and

cognitive terms.’

2.0 Fundamental

Map Analysis Approaches

The tools for

mapping database attributes can be extended to analysis of spatial

relationships within and among mapped data layers. Two broad classes of capabilities form this

extension—spatial statistics and spatial analysis.

2.1 Spatial Statistics

Spatial

statistics can be grouped into two broad camps—surface modeling and spatial

data mining. Surface modeling involves the translation of discrete point data

into a continuous surface that represents the geographic distribution of the

data. Traditional non-spatial statistics

involves an analogous process when a numerical distribution (e.g., standard

normal curve) is used to generalize the central tendency of a data set. The derived average and standard deviation

reflects the typical response and provides a measure of how typical it is. This characterization seeks to explain data

variation in terms of the numerical distribution of measurements without

reference to the data’s geographic distribution and patterns.

In

fact, an underlying assumption in most traditional statistical analyses is that

the data is randomly or uniformly distributed in geographic space. If the data exhibits a geographic pattern

(termed spatial autocorrelation) many of the non-spatial analysis techniques

are less valid. Spatial statistics, on

the other hand, utilizes inherent geographic patterns to further explain the

variation in a set of sample data.

There

are numerous techniques for characterizing the spatial distribution inherent in

a data set but they can be categorized into four basic approaches:

- Point Density mapping that aggregates the number of

points within a specified distance (number per acre),

- Spatial Interpolation that weight-averages measurements

within a localized area (e.g., Kriging), and

- Map Generalization that fits a functional form to the

entire data set (e.g., polynomial surface fitting).

- Geometric Facets that

construct a map surface by tessellation

(e.g., fitting a Triangular Irregular Network of facets to the sample data).

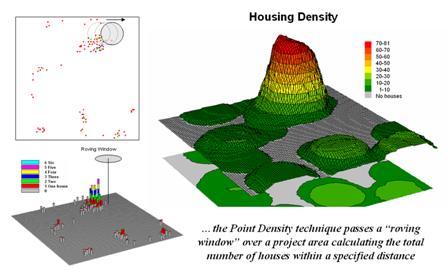

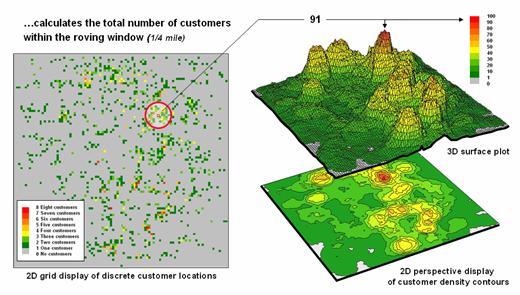

Figure

2.1-1. Calculating the total number of

houses within a specified distance of each map location generates a housing

density surface.

For

example, consider figure 2.1-1 showing a point density map derived from a map

identifying housing locations. The

project area is divided into an analysis frame of 30-meter grid cells (100 columns

x 100 rows = 10,000 grid cells). The

number of houses for each grid space is identified in left portion of the

figure as colored dots in the 2D map and “spikes” in the 3D map.

A

neighborhood summary operation is used to pass a “roving window” over the

project area calculating the total number of houses within a quarter-mile of

each map location. The result is a

continuous map surface indicating the relative density of houses—‘peaks’ where

there is a lot of nearby houses and ‘valleys’ where there are few or none. In essence, the map surface quantifies what

your eye sees in the spiked map—some locations with lots of houses and others

with very few.

While

surface modeling is used to derive continuous surfaces, spatial data mining seeks to uncover numerical relationships within

and among mapped data. Some of the

techniques include coincidence summary, proximal alignment, statistical tests,

percent difference, surface configuration, level-slicing, and clustering that

is used in comparing maps and assessing similarities in data patterns.

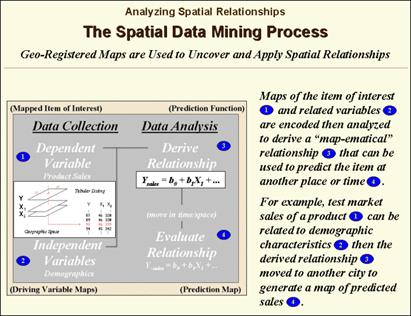

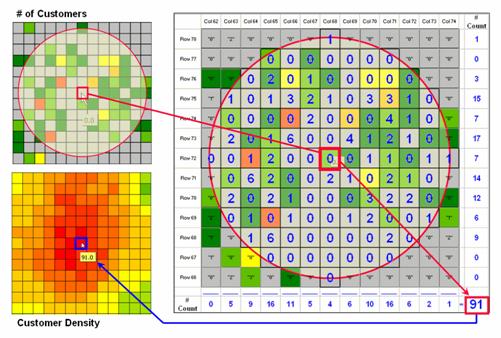

Another group of spatial data mining

techniques focuses on developing predictive models. For example, one of the earliest uses of

predictive modeling was in extending a test market project for a phone company

(figure 2.1-2). Customers’ address were

used to “geo-code” map coordinates for sales of a new product enabling

distinctly different rings to be assigned to a single phone line—one for the

kids and one for the parents. Like

pushpins on a map, the pattern of sales throughout the test market area emerged

with some areas doing very well, while other areas sales were few and far

between.

Figure 2.1-2. Spatial Data Mining

techniques can be used to derive predictive models of the relationships among

mapped data.

The demographic data for the city was

analyzed to calculate a prediction equation between product sales and census

block data. The prediction equation

derived from the test market sales was applied to another city by evaluating

exiting demographics to “solve the equation” for a predicted sales map. In turn the predicted map was combined with a

wire-exchange map to identify switching facilities that required upgrading

before release of the product in the new city.

2.2 Spatial Analysis

Whereas spatial data mining responds to

‘numerical’ relationships in mapped data, spatial

analysis investigates the ‘contextual’ relationships. Tools such as slope/aspect, buffers,

effective proximity, optimal path, visual exposure and shape analysis, fall

into this class of spatial operators.

Rather than statistical analysis of mapped data, these techniques

examine geographic patterns, vicinity characteristics and connectivity among

features.

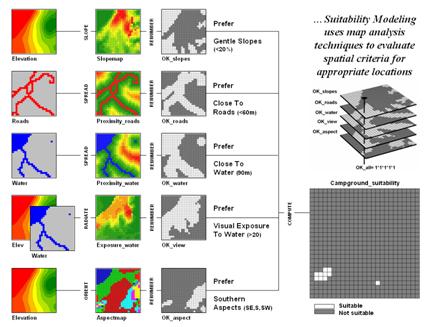

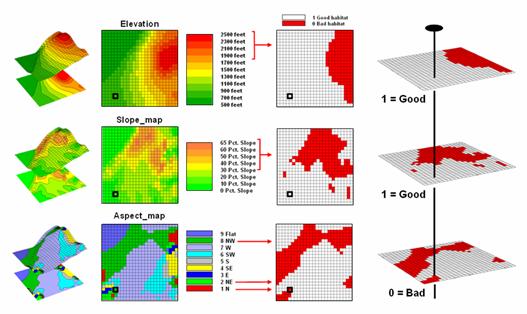

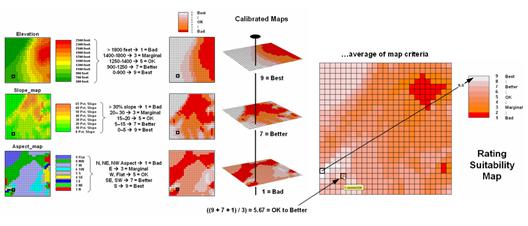

One of the most frequently used map analysis

techniques is Suitability Modeling. These applications seek to map the relative

appropriateness of map locations for particular uses. For example, a map of the best locations for

a campground might be modeled by preferences for being on gentle slopes, near

roads, near water, good views of water and a southerly aspect.

These spatial criteria can be organized into

a flowchart of processing (see figure 2.2-1) where boxes identify maps and

lines identify map analysis operations.

Note that the rows of the flowchart identify decision criteria with

boxes representing maps and lines representing map analysis operations. For example, the top row evaluates the

preference to locate the campground on gentle slopes.

Figure 2.2-1. Map Analysis

techniques can be used to identify suitable places for a management activity.

The first step calculates a slope map from

the base map of elevation by entering the command—

SLOPE Elevation Fitted FOR Slopemap

The derived slope values are then

reclassified to identify locations of acceptable slopes by assigning 1 to

slopes from 0 to 20 percent—

RENUMBER Slopemap

ASSIGNING 1 TO 0 THRU 20

ASSIGNING 0 TO 20 THRU 1000 FOR OK_slope

—and assigning 0 to unacceptably steep slopes

that are greater than 20 percent.

In a similar manner, the other criteria are

evaluated and represented by maps with a value of 1 assigned to acceptable

areas (white) and 0 assigned to unacceptable areas (dark grey). The individual preference maps are combined

by entering the command—

CALCULATE OK_slope

* OK_road * OK_water * OK_view * OK_aspect

FOR Campground_suitability

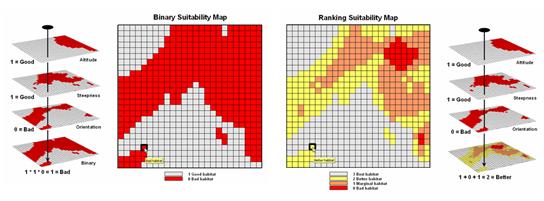

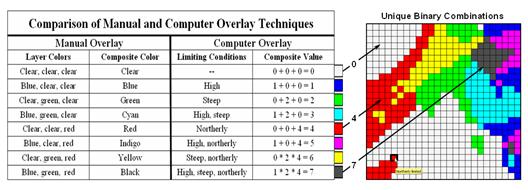

The map analysis procedure depicted in figure

2.2-1 simply substitutes values of 1 and 0 for suitable and non-suitable

areas. The multiplication of the digital

preference maps simulates stacking manual map overlays on a light-table. A location that computes to 1 (1*1*1*1*1= 1)

corresponds to acceptable. Any numeric

pattern with the value 0 will result in a product of 0 indicating an

unacceptable area.

While some map analysis techniques are rooted

in manual map processing, most are departures from traditional procedures. For example, the calculation of slope is much

more exacting than ocular estimates of contour line spacing. And the calculation of visual exposure to

water presents a completely new and useful concept in natural resources

planning. Other procedures, such as

optimal path routing, landscape fragmentation indices and variable-width

buffers, offer a valuable new toolbox of analytical capabilities.

3.0 Data

Structure Implications

Points,

lines and polygons have long been used to depict map features. With the stroke of a pen a cartographer could

outline a continent, delineate a road or identify a specific building’s

location. With the advent of the

computer, manual drafting of these data has been replaced by stored coordinates

and the cold steel of the plotter.

In

digital form these spatial data have been linked to attribute tables that

describe characteristics and conditions of the map features. Desktop mapping exploits this linkage to

provide tremendously useful database management procedures, such as address

matching and driving directions from you house to a store along an organized

set of city street line segments. Vector-based data forms the foundation

of these processing techniques and directly builds on our historical

perspective of maps and map analysis.

Grid-based data, on the other hand, is a

relatively new way to describe geographic space and its relationships. At the heart of this digital format is a new

map feature that extends traditional points, lines and polygons (discrete

objects) to continuous map surfaces.

The

rolling hills and valleys in our everyday world is a good example of a

geographic surface. The elevation values

constantly change as you move from one place to another forming a continuous

spatial gradient. The left-side of

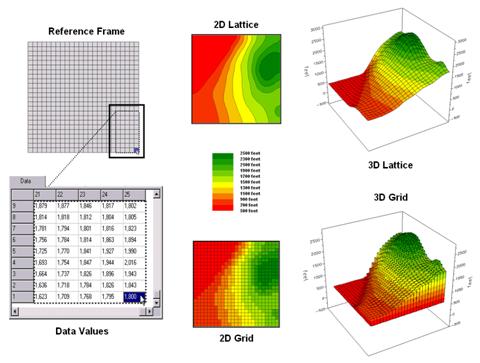

figure 3.0-1 shows the grid data structure and a sub-set of values used to

depict the terrain surface shown on the right-side. Note that the shaded zones ignore the subtle

elevation differences within the contour polygons (vector representation).

Figure 3.0-1. Grid-based data can be displayed in 2D/3D

lattice or grid forms.

Grid

data are stored as a continuous organized set of values in a matrix that is geo-registered

over the terrain. Each grid cell

identifies a specific location and contains a map value representing its

average elevation. For example, the grid

cell in the lower-right corner of the map is 1800 feet above sea level (falling

within the 1700 to 1900 contour interval).

The relative heights of surrounding elevation values characterize the

subtle changes of the undulating terrain of the area.

3.1 Grid Data Organization

Map

features in a vector-based mapping system identify discrete, irregular spatial

objects with sharp abrupt boundaries.

Other data types—raster images and raster grids—treat space in entirely

different manner forming a spatially continuous data structure.

For

example, a raster image is composed

of thousands of ‘pixels’ (picture elements) that are analogous to the dots on a

computer screen. In a geo-registered

B&W aerial photo, the dots are assigned a grayscale color from black (no

reflected light) to white (lots of reflected light). The eye interprets the patterns of gray as

forming the forests, fields, buildings and roads of the actual landscape. While raster maps contain tremendous amounts

of information that are easily “seen” and computer classified using remote

sensing software, the data values reference color codes reflecting

electromagnetic response that are too limited to support the full suite of map

analysis operations involving relationships within and among maps.

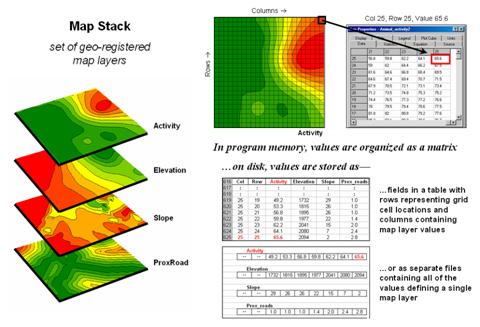

Figure 3.1-1. A map stack of individual grid layers can be

stored as separate files or in a multi-grid table.

Raster grids, on the other hand, contain a robust

range of values and organizational structure amenable to map analysis and

modeling. As depicted on the left side

of figure 3.1-1, this organization enables the computer to identify any or all

of the data for a particular location by simply accessing the values for a

given column/row position (spatial coincidence used in point-by-point overlay

operations). Similarly, the immediate or

extended neighborhood around a point can be readily accessed by selecting the

values at neighboring column/row positions (zonal groupings used in region-wide

overlay operations). The relative

proximity of one location to any other location is calculated by considering

the respective column/row positions of two or more locations (proximal

relationships used in distance and connectivity operations).

There

are two fundamental approaches in storing grid-based data—individual “flat”

files and “multiple-grid” tables (right side of figure 3.1-1). Flat files store map values as one long list,

most often starting with the upper-left cell, then sequenced left to right

along rows ordered from top to bottom.

Multi-grid tables have a similar ordering of values but contain the data

for many maps as separate field in a single table.

Generally

speaking the flat file organization is best for applications that create and

delete a lot of maps during processing as table maintenance can affect

performance. However, a multi-gird table

structure has inherent efficiencies useful in relatively non-dynamic

applications. In either case, the

implicit ordering of the grid cells over continuous geographic space provides

the topological structure required for advanced map analysis.

3.2 Grid Data Types

Understanding

that a digital map is first and foremost an organized set of numbers is

fundamental to analyzing mapped data.

The location of map features are translated into computer form as

organized sets of X,Y coordinates (vector) or grid

cells (raster). Considerable attention

is given data structure considerations and their relative advantages in storage

efficiency and system performance.

However

this geo-centric view rarely explains the full nature of digital maps. For example, consider the numbers themselves

that comprise the X,Y coordinates—how does number type

and size effect precision? A general

feel for the precision ingrained in a “single precision floating point”

representation of Latitude/Longitude in decimal degrees is—

1.31477E+08 ft = equatorial

circumference of the earth

1.31477E+08 ft / 360 degrees = 365214

ft per degree of Longitude

A

single precision number carries six decimal places, so—

365214

ft/degree * 0.000001= .365214 ft *12 = 4.38257 inch precision

In

analyzing mapped data however, the characteristics of the attribute values are

just as critical as precision in positioning.

While textual descriptions can be stored with map features they can only

be used in geo-query. Figure 3.2-1 lists

the data types by two important categories—numeric and geographic. Nominal

numbers do not imply ordering. A value

of 3 isn’t bigger, tastier or smellier than a 1, it is

just not a 1. In the figure these data

are schematically represented as scattered and independent pieces of wood.

Ordinal numbers, on the other hand, imply a

definite ordering and can be conceptualized as a ladder, however with varying

spaces between rungs. The numbers form a

progression, such as smallest to largest, but there isn’t a consistent

step. For example you might rank

different five different soil types by their relative crop productivity (1=

worst to 5= best) but it doesn’t mean that soil type 5 is exactly five times

more productive than soil type 1.

When

a constant step is applied, Interval

numbers result. For example, a 60o

Fahrenheit spring day is consistently/incrementally warmer than a 30o winter

day. In this case one “degree” forms a

consistent reference step analogous to typical ladder with uniform spacing

between rungs.

A

ratio number introduces yet another

condition—an absolute reference—that is analogous to a consistent footing or

starting point for the ladder, analogous to zero degrees Kelvin temperature

scale that defines when all molecular movement ceases. A final type of numeric data is termed Binary.

In this instance the value range is constrained to just two states, such

as forested/non-forested or suitable/not-suitable.

Figure 3.2-1. Map values are characterized from two broad

perspectives—numeric and geographic—then further refined by specific data

types.

So

what does all of this have to do with analyzing digital maps? The type of number dictates the variety of

analytical procedures that can be applied.

Nominal data, for example, do not support direct mathematical or statistical

analysis. Ordinal data support only a

limited set of statistical procedures, such as maximum and minimum. These two data types are often referred to as

Qualitative Data. Interval and ratio

data, on the other hand, support a full set mathematics and statistics and are

considered Quantitative Data. Binary

maps support special mathematical operators, such as .

The

geographic characteristics of the numbers are less familiar. From this perspective there are two types of

numbers. Choropleth numbers form sharp and unpredictable boundaries in space

such as the values on a road or cover type map.

Isopleth numbers, on the other

hand, form continuous and often predictable gradients in geographic space, such

as the values on an elevation or temperature surface.

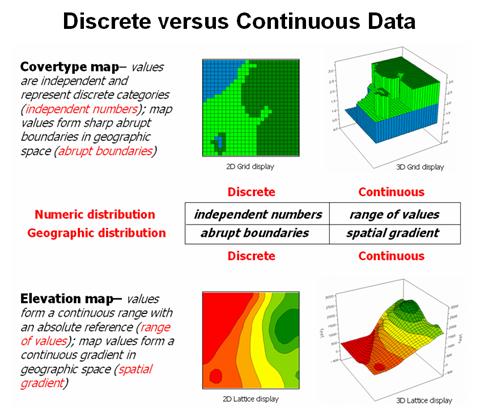

Figure

3.2-2 puts it all together. Discrete

maps identify mapped data with independent numbers (nominal) forming sharp

abrupt boundaries (choropleth), such as a cover type map. Continuous maps contain a range of values

(ratio) that form spatial gradients (isopleth), such as an elevation

surface.

The

clean dichotomy of discrete/continuous is muddled by cross-over data such as

speed limits (ratio) assigned to the features on a road map (choropleth). Understanding the data type, both numerical

and geographic, is critical to applying appropriate analytical procedures and

construction of sound

Figure 3.2-2. Discrete and Continuous map types combine the

numeric and geographic characteristics of mapped data.

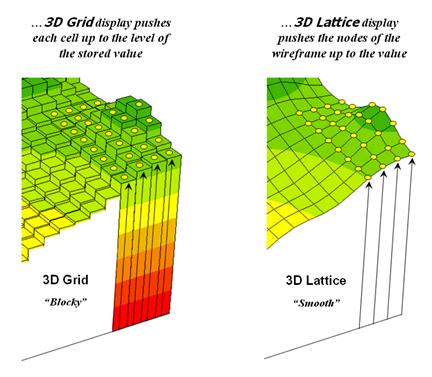

3.3 Grid Data Display

Two

basic approaches can be used to display grid data— grid and lattice. The Grid

display form uses cells to convey surface configuration. The 2D version simply fills each cell with

the contour interval color, while the 3D version pushes up each cell to its relative

height. The Lattice display form uses lines to convey surface

configuration. The contour lines in the

2D version identify the breakpoints for equal intervals of increasing

elevation. In the 3D version the

intersections of the lines are “pushed-up” to the relative height of the

elevation value stored for each location.

Figure

3.3-1 shows how 3D plots are generated.

Placing the viewpoint at different look-angles and distances creates

different perspectives of the reference frame.

For a 3D grid display entire cells are pushed to the relative height of

their map values. The grid cells retain

their projected shape forming blocky extruded columns. The 3D lattice display pushes up each

intersection node to its relative height.

In doing so the four lines connected to it are stretched

proportionally. The result is a smooth

wire-frame that expands and contracts with the rolling hills and valleys.

Figure 3.3-1. 3D display “pushes-up” the grid or lattice

reference frame to the relative height of the stored map values.

Generally

speaking, lattice displays create more pleasing maps and knock-your-socks-off

graphics when you spin and twist the plots.

However, grid displays provide a more honest picture of the underlying

mapped data—a chunky matrix of stored values.

In either case, one must recognize that a 3-D display is not the sole

province of elevation data. Often a

3-dimensional plot of data such as effective proximity is extremely useful in

understanding the subtle differences in distances.

3.4 Visualizing Grid Values

In

a

The

display tools are both a boon and a bane as they require minimal skills to use

but considerable thought and experience to use correctly. The interplay among map projection, scale,

resolution, shading and symbols can dramatically change a map’s appearance and

thereby the information it graphically conveys to the viewer.

While

this is true for the points, lines and areas comprising traditional maps, the

potential for cartographic effects are even more pronounced for contour maps of

surface data. For example, consider the

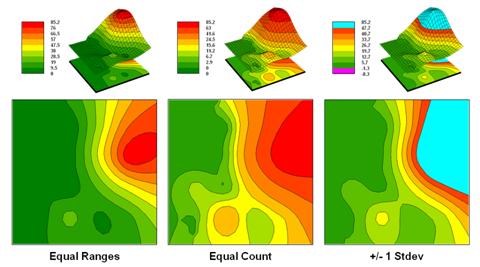

mapped data of animal activity levels from 0.0 to 85.2 animals in a 24-hour

period shown in figure 3.4-1. The map on

the left uses an Equal Ranges display

with contours derived by dividing the data range into nine equal steps. The flat area at the foot of the hill skews

the data distribution toward lower values.

The result is significantly more map locations contained in the lower

contour intervals— first interval from 0 to 9.5 = 39% of the map area. The spatial effect is portrayed by the

radically different areal extent of the contours.

Figure 3.4-1. Comparison of different

2D contour displays using Equal ranges, Equal Count and +/-1 Standard deviation

contouring techniques.

The

middle map in the figure shows the same data displayed as Equal Counts with contours that divide the data range into intervals

that represent equal amounts of the total map area. Notice the unequal spacing of the breakpoints

in the data range but the balanced area of the color bands—the opposite effect

as equal ranges.

The

map on the right depicts yet another procedure for assigning contour

breaks. This approach divides the data

into groups based on the calculated mean and Standard Deviation. The

standard deviation is added to the mean to identify the breakpoint for the

upper contour interval and subtracted to set the lower interval. In this case, the lower breakpoint calculated

is below the actual minimum so no values are assigned to the first interval

(highly skewed data). In statistical

terms the low and high contours are termed the “tails” of the distribution and

locate data values that are outside the bulk of the data— identifying

“unusually” lower and higher values than you normally might expect. The other five contour intervals in the

middle are formed by equal ranges within the lower and upper contours. The result is a map display that highlights

areas of unusually low and high values and shows the bulk of the data as

gradient of increasing values.

The

bottom line of visual analysis is that the same surface data generated

dramatically different map products. All

three displays contain nine intervals but the methods of assigning the

breakpoints to the contours employ radically different approaches that generate

fundamentally different map displays.

So

which display is correct? Actually all

three displays are proper, they just reflect different perspectives of the same

data distribution—a bit of the art in the art and science of

4.0 Spatial

Statistics Techniques

As outlined in section 2.1, Spatial Statistics can be grouped into

two broad camps—surface modeling and spatial data mining. Surface

Modeling involves the translation of discrete point data into a continuous

surface that represents the geographic distribution of the data. Spatial

Data Mining, on the other hand, seeks to uncover numerical relationships

within and among sets of mapped data.

4.1 Surface Modeling

The

conversion of a set of point samples into its implied geographic distribution

involves several considerations—an understanding of the procedures themselves,

the underlying assumptions, techniques for benchmarking the derived map

surfaces and methods for assessing the results and characterizing

accuracy.

4.1.1 Point Samples to

Map Surfaces

Soil

sampling has long been at the core of agricultural research and practice. Traditionally point-sampled data were

analyzed by non-spatial statistics to identify the typical nutrient level

throughout an entire field. Considerable

effort was expended to determine the best single estimate and assess just how

good the average estimate was in typifying a field.

However

non-spatial techniques fail to make use of the geographic patterns inherent in

the data to refine the estimate—the typical level is assumed everywhere the

same within a field. The computed

standard deviation indicates just how good this assumption is—the larger the

standard deviation the less valid is the assumption “…everywhere the same.”

Surface Modeling utilizes the spatial patterns in a

data set to generate localized estimates throughout a field. Conceptually it maps the variance by using

geographic position to help explain the differences in the sample values. In practice, it simply fits a continuous

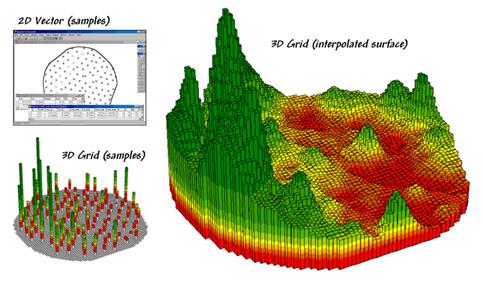

surface to the point data spikes as depicted in figure 4.1.1-1.

Figure 4.1.1-1. Spatial interpolation involves fitting a

continuous surface to sample points.

While

the extension from non-spatial to spatial statistics is quite a theoretical

leap, the practical steps are relatively easy.

The left side of the figure shows 2D and 3D point maps of phosphorous

soil samples collected throughout the field.

This highlights the primary difference from traditional soil

sampling—each sample must be geo-referenced

as it is collected. In addition, the sampling pattern and intensity are

often different than traditional grid sampling to maximize spatial information

within the data collected.

The

surface map on the right side of the figure depicts the continuous spatial

distribution derived from the point data.

Note that the high spikes in the left portion of the field and the

relatively low measurements in the center are translated into the peaks and

valleys of the surface map.

When

mapped, the traditional, non-spatial approach forms a flat plane (average

phosphorous level) aligned within the bright yellow zone. Its “…everywhere the same” assumption fails

to recognize the patterns of larger levels and smaller levels captured in the

surface map of the data’s geographic distribution. A fertilization plan for phosphorous based on

the average level (22ppm) would be ideal for very few locations and be

inappropriate for most of the field as the sample data varies from 5 to 102ppm

phosphorous.

4.1.2 Spatial Autocorrelation

Spatial

Interpolation’s basic concept involves Spatial Autocorrelation,

referring to the degree of similarity among neighboring points (e.g., soil

nutrient samples). If they exhibit a lot similarity, termed spatial dependence, they ought to

derive a good map. If they are spatially independent, then expect a map

of pure, dense gibberish. So how can we measure whether “what happens at

one location depends on what is happening around it?"

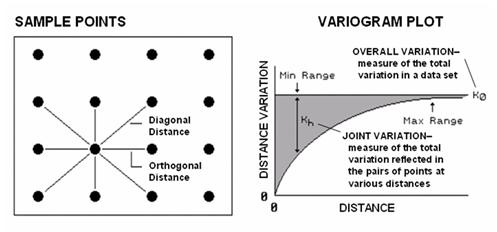

Common

sense leads us to believe more similarity exists among the neighboring soil

samples (lines in the left side of figure 4.1.2-1) than among sample points

farther away. Computing the differences in the values between each sample

point and its closest neighbor provides a test of the assertion as nearby

differences should be less than the overall difference among the values of all

sample locations.

Figure 4.1.2-1. Variogram plot depicts

the relationship between distance and measurement similarity (spatial

autocorrelation).

If

the differences in neighboring values are a lot smaller than the overall

variation, then a high degree of positive spatial dependency is

indicated. If they are about the same or if the neighbors variation is

larger (indicating a rare checkerboard-like condition), then the assumption of

spatial dependence fails. If the dependency test fails, it means an

interpolated soil nutrient map likely is just colorful gibberish.

The

difference test however, is limited as it merely assesses the closest neighbor,

regardless of its distance. A Variogram (right side of figure 4-2)

is a plot of the similarity among values based on the distance between

them. Instead of simply testing whether close things are related, it

shows how the degree of dependency relates to varying distances between

locations. The origin of the plot at 0,0 is a unique case where the distance

between samples is zero and there is no dissimilarity (data variation = 0) indicating that a location is

exactly the same as itself.

As

the distance between points increase, subsets of the data are scrutinized for

their dependency. The shaded portion in the idealized plot shows how

quickly the spatial dependency among points deteriorates with distance.

The maximum range (Max Range) position identifies the distance between points

beyond which the data values are considered independent. This tells us that using data values beyond

this distance for interpolation actually can mess-up the interpolation.

The

minimum range (Min Range) position identifies the smallest distance contained

in the actual data set and is determined by the sampling design used to collect

the data. If a large portion of the shaded area falls below this

distance, it tells you there is insufficient spatial dependency in the data set

to warrant interpolation. If you proceed with the interpolation, a nifty

colorful map will be generated, but likely of questionable accuracy. Worse yet, if the sample data plots as a

straight line or circle, no spatial dependency exists and the map will be of no

value.

Analysis

of the degree of spatial autocorrelation in a set of point samples is mandatory

before spatially interpolating any data. This step is not required to

mechanically perform the analysis as the procedure will always generate a

map. However, it is the initial step in

determining if the map generated is likely to be a good one.

4.1.3

Benchmarking Interpolation

Approaches

For

some, the previous discussion on generating maps from soil samples might have

been too simplistic—enter a few things then click on a data file and, in a few moments you have a soil

nutrient surface. Actually, it is that

easy to create one. The harder part is

figuring out if the map generated makes sense and whether it is something you

ought to use for subsequent analysis and important management decisions.

The

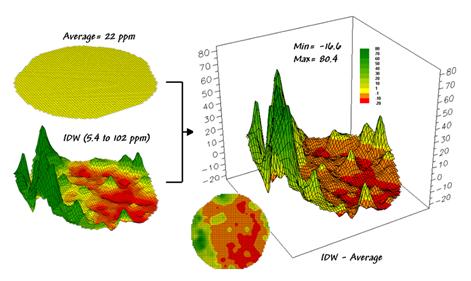

following discussion investigates the relative amounts of spatial information

provided by comparing a whole-field average to interpolated map surfaces

generated from the same data set. The

top-left portion in figure 4.1.3-3 shows the map of the average phosphorous

level in the field. It forms a flat

surface as there isn’t any information about spatial variability in an average

value.

The

non-spatial estimate simply adds up all of the sample measurements and divides

by the number of samples to get 22ppm. Since

the procedure didn’t consider the relative position of the different samples,

it is unable to map the variations in the measurements. The assumption is that the average is

everywhere, plus or minus the standard deviation. But there is no spatial guidance where

phosphorous levels might be higher, or where they might be lower than the

average.

Figure 4.1.3-1. Spatial comparison of a whole-field average

and an IDW interpolated map.

The

spatially based estimates are shown in the interpolated map surface below the

average plane. As described in the

previous section 4.1.2, spatial interpolation looks at the relative positioning

of the soil samples as well as their measure phosphorous levels. In this instance the big bumps were

influenced by high measurements in that vicinity while the low areas responded

to surrounding low values.

The

map surface in the right portion of figure 4.1.3-1 compares the two maps simply

by subtracting them. The color ramp was

chosen to emphasize the differences between the whole-field average estimates

and the interpolated ones. The center

yellow band indicates the average level while the progression of green tones

locates areas where the interpolated map estimated that there was more

phosphorous than the whole field average.

The higher locations identify where the average value is less than the

interpolated ones. The lower locations

identify the opposite condition where the average value is more than the

interpolated ones. Note the dramatic

differences between the two maps.

Now

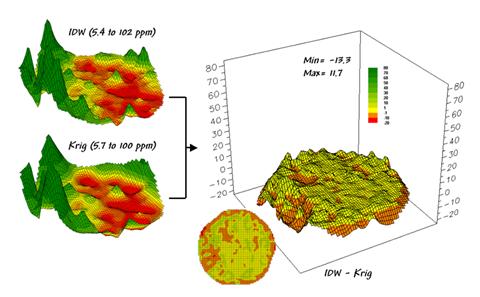

turn your attention to figure 4.1.3-2 that compares maps derived by two

different interpolation techniques—IDW (inverse distance-weighted) and Krig. Note the

similarity in the peaks and valleys of the two surfaces. While subtle differences are visible the

general trends in the spatial distribution of the data are identical.

The

difference map on the right confirms the coincident trends. The broad band of yellow identifies areas

that are +/- 1 ppm.

The brown color identifies areas that are within 10 ppm

with the IDW surface estimates a bit more than the Krig

ones. Applying the same assumption about

+/- 10 ppm difference being negligible in a

fertilization program the maps are effectively identical.

Figure 4.1.3-2. Spatial comparison of IDW and Krig interpolated maps.

So

what’s the bottom line? That there often

are substantial differences between a whole field average and any interpolated

surface. It suggests that finding the

best interpolation technique isn’t as important as using an interpolated

surface over the whole field average.

This general observation holds most mapped data exhibiting spatial

autocorrelation.

4.1.4 Assessing Interpolation

Results

The

previous discussion compared the assumption of the field average with map

surfaces generated by two different interpolation techniques for phosphorous

levels throughout a field. While there

was considerable differences between the average and the derived surfaces (from

-20 to +80ppm), there was relatively little difference between the two surfaces

(+/- 10ppm).

But

which surface best characterizes the spatial distribution of the sampled

data? The answer to this question lies

in Residual Analysis—a

technique that investigates the differences between estimated and measured

values throughout a field. It is common

sense that one should not simply accept an interpolated map without assessing

its accuracy. Ideally, one designs an

appropriate sampling pattern and then randomly locates a number of test points

to evaluate interpolation performance.

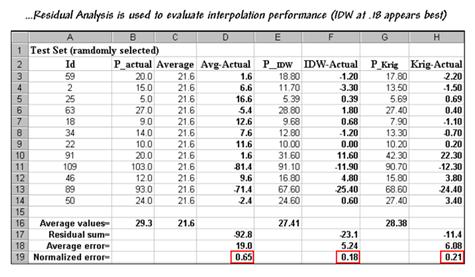

So

which surface, IDW or Krig, did a better job in

estimating the measured phosphorous levels for a test set of measurements? The table in figure 4.1.4-1 reports the

results for twelve randomly positioned test samples. The first column identifies the sample ID and

the second column reports the actual measured value for that location.

Figure 4.1.4-1. A residual analysis table identifies the

relative performance of average, IDW and Krig

estimates.

Column

C simply depicts estimating the whole-field average (21.6) at each of the test

locations. Column D computes the difference

of the estimated value minus actual measured value for the test set—formally

termed the residual. For example,

the first test point (ID#59) estimated the average of 21.6 but was actually

measured as 20.0, so the residual is 1.6 (21.6-20.0= 1.6ppm) …very close. However, test point #109 is way off

(21.6-103.0= -81.4ppm) …nearly 400% under-estimate error.

The

residuals for the IDW and Krig maps are similarly

calculated to form columns F and H, respectively. First note that the residuals for the whole-field

average are generally larger than either those for the IDW or Krig estimates. Next

note that the residual patterns between the IDW and Krig

are very similar—when one is way off, so is the other and usually by about the

same amount. A notable exception is for

test point #91 where Krig dramatically

over-estimates.

The

rows at the bottom of the table summarize the residual analysis results. The Residual

sum row characterizes any bias in the estimates—a negative value indicates

a tendency to underestimate with the magnitude of the value indicating how

much. The –92.8 value for the

whole-field average indicates a relatively strong bias to underestimate.

The

Average error row reports how

typically far off the estimates were.

The 19.0ppm average error for the whole-field average is three times

worse than Krig’s estimated error (6.08) and nearly

four times worse than IDW’s (5.24).

Comparing

the figures to the assumption that +/-10ppm is negligible in a fertilization

program it is readily apparent that the whole-field estimate is inappropriate

to use and that the accuracy differences between IDW and Krig

are minor.

The

Normalized error row simply

calculates the average error as a proportion of the average value for the test

set of samples (5.24/29.3= .18 for IDW).

This index is the most useful as it enables the comparison of the

relative map accuracies between different maps.

Generally speaking, maps with normalized errors of more than .30 are

suspect and one might not want to make important decisions using them.

The

bottom line is that Residual Analysis is an important consideration when

spatially interpolating data. Without an

understanding of the relative accuracy and interpolation error of the base

maps, one can’t be sure of any modeling results using the data. The investment in a few extra sampling points

for testing and residual analysis of these data provides a sound foundation for

site-specific management. Without it,

the process can become one of blind faith and wishful thinking.

4.2 Spatial

Data Mining

Spatial data mining involves procedures for uncovering

numerical relationships within and among sets of mapped data. The underlying concept links a map’s

geographic distribution to its corresponding numeric distribution through the

coordinates and map values stored at each location. This ‘data space’ and ‘geographic space’

linkage provides a framework for calculating map similarity, identifying data

zones, mapping data clusters, deriving prediction maps and refining analysis

techniques.

4.2.1 Calculating Map

Similarity

While visual analysis of

a set of maps might identify broad relationships, it takes quantitative map

analysis to handle a detailed scrutiny.

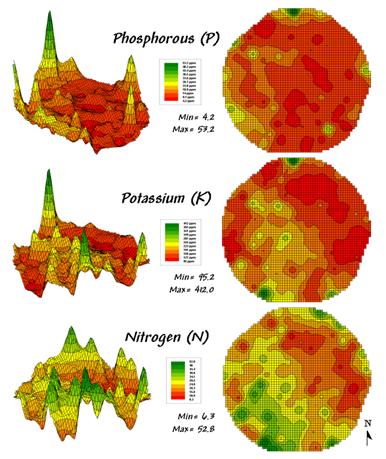

Consider the three maps shown in figure 4.2.1-1— what areas identify

similar patterns? If you focus your attention

on a location in the lower right portion how similar is the data pattern to all

of the other locations in the field?

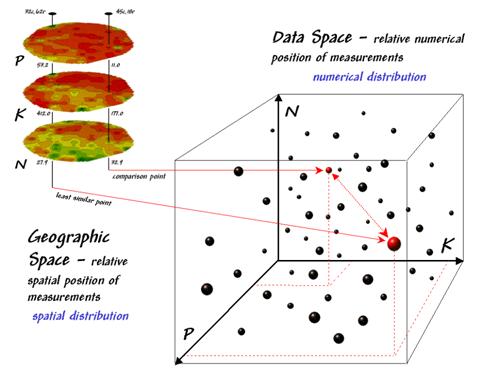

Figure

4.2.1-1. Map surfaces identifying the

spatial distribution of P,K and N throughout a field.

The answers to these

questions are much too complex for visual analysis and certainly beyond the

geo-query and display procedures of standard desktop mapping packages. While the data in the example shows the

relative amounts of phosphorous, potassium and nitrogen throughout a field, it

could as easily be demographic data representing income, education and property

values; or sales data tracking three different products; or public health maps

representing different disease incidences; or crime statistics representing

different types of felonies or misdemeanors.

Regardless of the data

and application arena, a multivariate procedure for assessing similarity often

is used to analyze the relationships. In

visual analysis you move your eye among the maps to summarize the color

assignments at different locations. The

difficulty in this approach is two-fold— remembering the color patterns and

calculating the difference. The map

analysis procedure does the same thing except it uses map values in place of

the colors. In addition, the computer

doesn’t tire as easily and completes the comparison for all of the locations

throughout the map window (3289 in this example) in a couple of seconds.

The upper-left portion of

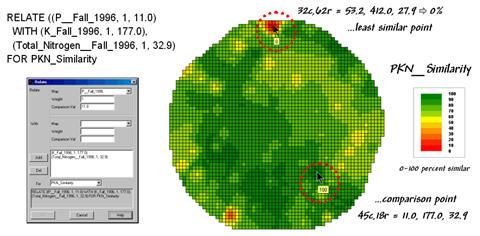

figure 4.2.1-2 illustrates capturing the data patterns of two locations for

comparison. The “data spear” at map

location 45column, 18row identifies that the P-level as 11.0ppm, the K-level as

177.0 and N-level as 32.9. This step is

analogous to your eye noting a color pattern of dark-red, dark-orange and

light-green. The other location for

comparison (32c, 62r) has a data pattern of P= 53.2, K= 412.0 and N= 27.9; or

as your eye sees it, a color pattern of dark-green, dark-green and yellow.

Figure 4.2.1-2. Geographic space and

data space can be conceptually linked.

The right side of the

figure conceptually depicts how the computer calculates a similarity value for

the two response patterns. The

realization that mapped data can be expressed in both geographic space and data

space is a key element to understanding the procedure.

Geographic space

uses coordinates, such latitude and longitude, to locate things in the real

world—such as the southeast and extreme north points identified in the

example. The geographic expression of

the complete set of measurements depicts their spatial distribution in familiar

map form.

Data space, on the other hand, is a

bit less familiar but can be conceptualized as a box with balls floating within

it. In the example, the three axes

defining the extent of the box correspond to the P, K and N levels measured in

the field. The floating balls represent

grid cells defining the geographic space—one for each grid cell. The coordinates locating the floating balls

extend from the data axes—11.0, 177.0 and 32.9 for the comparison point. The other point has considerably higher

values in P and K with slightly lower N (53.2, 412.0, 27.9) so it plots at a

different location in data space.

The bottom line is that

the position of any point in data space identifies its numerical pattern—low,

low, low is in the back-left corner, while high, high, high is in the upper-right

corner. Points that plot in data space

close to each other are similar; those that plot farther away are less

similar.

In the example, the

floating ball in the foreground is the farthest one (least similar) from the

comparison point’s data pattern. This

distance becomes the reference for ‘most different’ and sets the bottom value

of the similarity scale (0%). A point

with an identical data pattern plots at exactly the same position in data space

resulting in a data distance of 0 that equates to the highest similarity value

(100%).

The similarity map shown

in figure 4.2.1-3 applies the similarity scale to the data distances calculated

between the comparison point and all of the other points in data space. The green tones indicate field locations with

fairly similar P, K and N levels. The

red tones indicate dissimilar areas. It

is interesting to note that most of the very similar locations are in the left

portion of the field.

Figure 4.2.1-3. A similarity map identifies how related the

data patterns are for all other locations to the pattern of a given comparison

location.

Map Similarity can

be an invaluable tool for investigating spatial patterns in any complex set of

mapped data. Humans are unable to

conceptualize more than three variables (the data space box); however a

similarity index can handle any number of input maps. In addition, the different layers can be

weighted to reflect relative importance in determining overall similarity.

In effect, a similarity

map replaces a lot of laser-pointer waving and subjective suggestions of how

similar/dissimilar locations are with a concrete, quantitative measurement for

each map location.

4.2.2 Identifying Data Zones

The preceding section introduced the concept of ‘data distance’ as a means to

measure similarity within a map. One

simply mouse-clicks a location and all of the other locations are assigned a

similarity value from 0 (zero percent similar) to 100 (identical) based on a

set of specified maps. The statistic

replaces difficult visual interpretation of map displays with an exact

quantitative measure at each location.

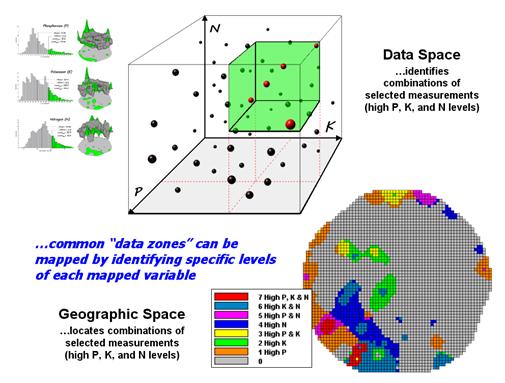

Figure 4.2.2-1 depicts

level slicing for areas that are unusually high in P, K and N (nitrogen). In this instance the data pattern coincidence

is a box in 3-dimensional data space.

Figure 4.2.2-1. Level-slice

classification can be used to map sub-groups of similar data patterns.

A mathematical trick was

employed to get the map solution shown in the figure. On the individual maps, high areas were set

to P=1, K= 2 and N=4, then the maps were added together. The result is a range of coincidence values

from zero (0+0+0= 0; gray= no high areas) to seven (1+2+4= 7; red= high P, high

K, high N). The map values between these

extremes identify the individual map layers having high measurements. For example, the yellow areas with the value

3 have high P and K but not N (1+2+0= 3).

If four or more maps are combined, the areas of interest are assigned

increasing binary progression values (…8, 16, 32, etc)—the sum will always

uniquely identify the combinations.

While Level Slicing

is not a sophisticated classifier, it illustrates the useful link between data

space and geographic space. This

fundamental concept forms the basis for most geo-statistical analysis including

map clustering and regression.

4.2.3 Mapping Data Clusters

While both Map Similarity

and Level Slicing techniques are useful in examining spatial relationships,

they require the user to specify data analysis parameters. But what if you don’t know what level slice

intervals to use or which locations in the field warrant map similarity

investigation? Can the computer on its

own identify groups of similar data? How

would such a classification work? How

well would it work?

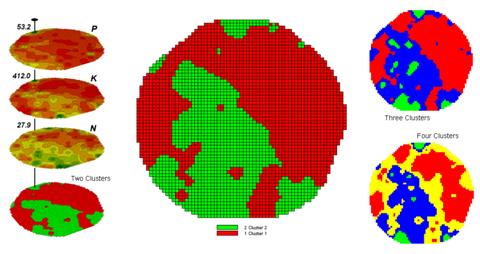

Figure 4.2.3-1. Map clustering

identifies inherent groupings of data patterns in geographic space.

Figure 4.2.3-1 shows some

examples derived from Map Clustering.

The map stack on the left shows the input maps used for the cluster

analysis. The maps are the same P, K,

and N maps identifying phosphorous, potassium and nitrogen levels used in the

previous discussions in this section.

However, keep in mind that the input maps could be crime, pollution or

sales data—any set of application related data.

Clustering simply looks at the numerical pattern at each map location

and sorts them into discrete groups regardless of the nature of the data or its

application.

The map in the center of

the figure shows the results of classifying the P, K and N map stack into two

clusters. The data pattern for each cell

location is used to partition the field into two groups that meet the criteria

as being 1) as different as possible between groups and 2) as similar

as possible within a group.

The two smaller maps at

the right show the division of the data set into three and four clusters. In all three of the cluster maps red is

assigned to the cluster with relatively low responses and green to the one with

relatively high responses. Note the

encroachment on these marginal groups by the added clusters that are formed by

data patterns at the boundaries.

The mechanics of

generating cluster maps are quite simple.

Simply specify the input maps and the number of clusters you want then

miraculously a map appears with discrete data groupings. So how is this miracle performed? What happens inside cluster’s black box?

Figure 4.2.3-2. Data patterns for map

locations are depicted as floating balls in data space.

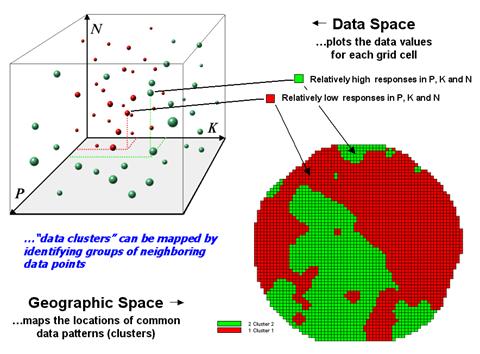

The schematic in figure

4.2.3-2 depicts the process. The

floating balls identify the data patterns for each map location (geographic

space) plotted against the P, K and N axes (data space). For example, the large ball appearing closest

to you depicts a location with high values on all three input maps. The tiny ball in the opposite corner (near

the plot origin) depicts a map location with small map values. It seems sensible that these two extreme

responses would belong to different data groupings.

While the specific algorithm

used in clustering is beyond the scope of this chapter, it suffices to note

that ‘data distances’ between the floating balls are used to identify cluster

membership—groups of floating balls that are relatively far from other groups

and relatively close to each other form separate data clusters. In this example, the red balls identify

relatively low responses while green ones have relatively high responses. The geographic pattern of the classification

is shown in the map in the lower right portion of the figure.

Identifying groups of

neighboring data points to form clusters can be tricky business. Ideally, the clusters will form distinct

clouds in data space. But that rarely

happens and the clustering technique has to enforce decision rules that slice a

boundary between nearly identical responses.

Also, extended techniques can be used to impose weighted boundaries

based on data trends or expert knowledge.

Treatment of categorical data and leveraging spatial autocorrelation are

other considerations.

So how do know if the

clustering results are acceptable? Most

statisticians would respond, “…you can’t tell for sure.” While there are some elaborate procedures

focusing on the cluster assignments at the boundaries, the most frequently used

benchmarks use standard statistical indices.

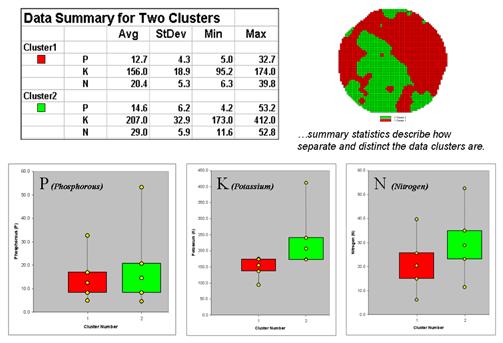

Figure 4.2.3-3. Clustering results can

be roughly evaluated using basic statistics.

Figure 4.2.3-3 shows the

performance table and box-and-whisker plots for the map containing two clusters. The average, standard deviation, minimum and

maximum values within each cluster are calculated. Ideally the averages would be radically

different and the standard deviations small—large difference between groups and

small differences within groups.

Box-and-whisker plots

enable a visualize assessment of the differences. The box is centered on the average (position)

and extends above and below one standard deviation (width) with the whiskers

drawn to the minimum and maximum values to provide a visual sense of the data

range. When the diagrams for the two

clusters overlap, as they do for the phosphorous responses, it suggests that

the clusters are not distinct along this data axis.

The separation between

the boxes for the K and N axes suggests greater distinction between the

clusters. Given the results a practical

user would likely accept the classification results. And statisticians hopefully will accept in

advance apologies for such a conceptual and terse treatment of a complex

spatial statistics topic.

4.2.4 Deriving Prediction

Maps

For years non-spatial statistics has been predicting things by analyzing a

sample set of data for a numerical relationship (equation) then applying the

relationship to another set of data. The

drawbacks are that the non-approach doesn’t account for geographic

relationships and the result is just a table of numbers. Extending predictive analysis to mapped data

seems logical; after all, maps are just organized sets of numbers. And

To illustrate the data

mining procedure, the approach can be applied to the same field that has been

the focus for the previous discussion.

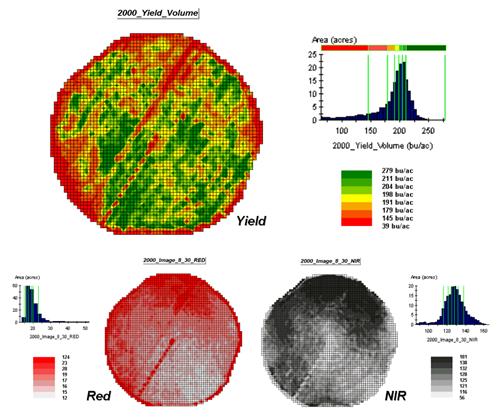

The top portion of figure 4.2.4-1 shows the yield pattern of corn for

the field varying from a low of 39 bushels per acre (red) to a high of 279

(green). The corn yield map is termed

the dependent map variable and identifies the phenomena to be predicted.

The independent map

variables depicted in the bottom portion of the figure are used to uncover

the spatial relationship used for prediction— prediction equation. In this instance, digital aerial imagery will

be used to explain the corn yield patterns.

The map on the left indicates the relative reflectance of red light off

the plant canopy while the map on the right shows the near-infrared response (a

form of light just beyond what we can see).

Figure 4.2.4-1. The corn yield map

(top) identifies the pattern to predict; the red and near-infrared maps

(bottom) are used to build the spatial relationship.

While it is difficult to visually

assess the subtle relationships between corn yield and the red and

near-infrared images, the computer “sees” the relationship quantitatively. Each grid location in the analysis frame has

a value for each of the map layers— 3,287 values defining each geo-registered

map covering the 189-acre field.

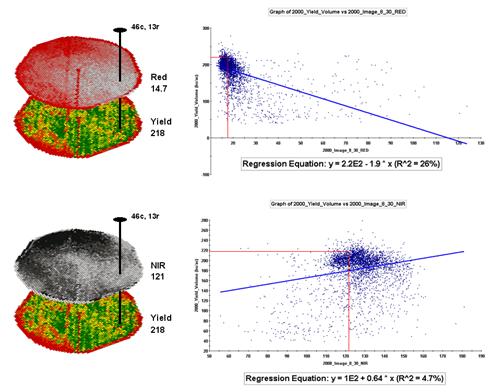

For example, top portion

of figure 4.2.4-2 identifies that the example location has a ‘joint’ condition

of red band equals 14.7 and yield equals 218.

The lines parallel to axes in the scatter plot on the right identifies

the precise position of the pair of map values—X= 14.7 and Y= 218. Similarly, the near-infrared and yield values

for the same location are shown in the bottom portion of the figure.

The set of dots in both

of the scatter plots represents all of the data pairs for each grid

location. The slanted lines through the

dots represent the prediction equations derived through regression

analysis. While the mathematics is a bit

complex, the effect is to identify a line that ‘best fits the data’— just as

many data points above as below the regression line.

Figure 4.2.4-2. The joint conditions

for the spectral response and corn yield maps are summarized in the scatter

plots shown on the right.

In a sense, the line

identifies the average yield for each step along the X-axis for the red and

near-infrared bands and a reasonable guess of the corn yield for each level of

spectral response. That’s how a

regression prediction is used— a value for the red band (or near-infrared band)

in another field is entered and the equation for the line calculates a

predicted corn yield. Repeating the

calculation for all of the locations in the field generates a prediction map of

yield from remotely sensed data.

A major problem is that

the R-squared statistic summarizing the residuals for both of the prediction

equations is fairly small (R^2= 26% and 4.7% respectively) which suggests that

the prediction lines do not fit the data very well. One way to improve the predictive model might

be to combine the information in both of the images. The Normalized Density Vegetation Index

(NDVI) does just that by calculating a new value that indicates plant density

and vigor— NDVI= ((NIR – Red) / (NIR + Red)).

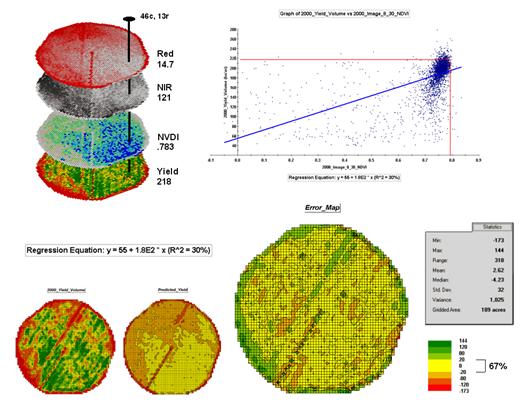

Figure 4.2.4-3 shows the

process for calculating NDVI for the sample grid location— ((121-14.7) / (121 +

14.7)) = 106.3 / 135.7 = .783. The scatter

plot on the right shows the yield versus NDVI plot and regression line for all

of the field locations. Note that the

R^2 value is a higher at 30% indicating that the combined index is a better

predictor of yield.

Figure 4.2.4-3. The red and NIR maps

are combined for NDVI value that is a better predictor of yield.

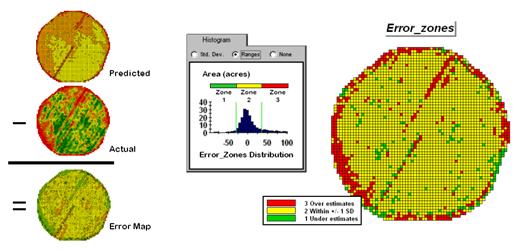

The bottom portion of the

figure evaluates the prediction equation’s performance over the field. The two smaller maps show the actual yield

(left) and predicted yield (right). As

you would expect the prediction map doesn’t contain the extreme high and low

values actually measured.

The larger map on the

right calculates the error of the estimates by simply subtracting the actual

measurement from the predicted value at each map location. The error map suggests that overall the yield

estimates are not too bad— average error is a 2.62 bu/ac

over estimate and 67% of the field is within +/- 20 bu/ac. Also

note the geographic pattern of the errors with most of the over estimates

occurring along the edge of the field, while most of the under

estimates are scattered along NE-SW strips.

Evaluating a prediction

equation on the data that generated it is not validation; however the procedure

provides at least some empirical verification of the technique. It suggests hope that with some refinement

the prediction model might be useful in predicting yield from remotely sensed

data well before harvest.

4.2.5 Stratifying Maps for

Better Predictions

The preceding section described a procedure for predictive analysis of mapped

data. While the underlying theory,

concerns and considerations can be quite complex, the procedure itself is quite

simple. The grid-based processing

preconditions the maps so each location (grid cell) contains the appropriate

data. The ‘shishkebab’

of numbers for each location within a stack of maps are analyzed for a

prediction equation that summarizes the relationships.

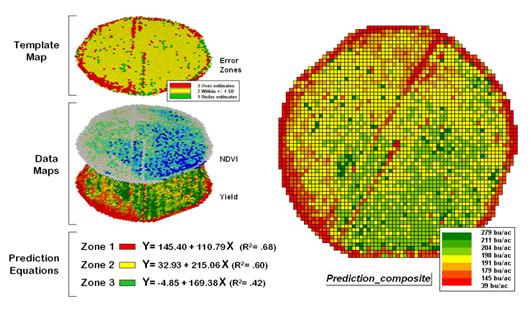

The left side of figure

4.2.5-1 shows the evaluation procedure for regression analysis and error map

used to relate a map of NDVI to a map of corn yield for a farmer’s field. One way to improve the predictions is to

stratify the data set by breaking it into groups of similar

characteristics. The idea is that set of

prediction equations tailored to each stratum will result in better predictions

than a single equation for an entire area.

The technique is commonly used in non-spatial statistics where a data

set might be grouped by age, income, and/or education prior to analysis. Additional factors for stratifying, such as

neighboring conditions, data clustering and/or proximity can be used as well.

Figure 4.2.5-1. A project area can be

stratified based on prediction errors.

While there are numerous

alternatives for stratifying, subdividing the error map will serve to

illustrate the conceptual approach. The

histogram in the center of figure 4.2.5-1 shows the distribution of values on

the Error Map. The vertical bars

identify the breakpoints at +/- 1 standard deviation and divide the map values

into three strata—zone 1 of unusually high under-estimates (red), zone 2 of

typical error (yellow) and zone 3 of unusually high over-estimates

(green). The map on the right of the figure

maps the three strata throughout the field.

The rationale behind the

stratification is that the whole-field prediction equation works fairly well

for zone 2 but not so well for zones 1 and 3.

The assumption is that conditions within zone 1 make the equation under

estimate while conditions within zone 3 cause it to over

estimate. If the assumption holds

one would expect a tailored equation for each zone would be better at

predicting corn yield than a single overall equation.

Figure 4.2.5-2 summarizes

the results of deriving and applying a set of three prediction equations. The left side of the figure illustrates the

procedure. The Error Zones map is used

as a template to identify the NDVI and Yield values used to calculate three

separate prediction equations. For each

map location, the algorithm first checks the value on the Error Zones map then

sends the data to the appropriate group for analysis. Once the data has been grouped a regression

equation is generated for each zone.

Figure 4.2.5-2. After stratification,

prediction equations can be derived for each element.

The R^2 statistic for all

three equations (.68, .60, and .42 respectively) suggests that the equations

fit the data fairly well and ought to be good predictors. The right side of figure 4.2.5-2 shows a

composite prediction map generated by applying the equations to the NDVI data

respecting the zones identified on the template map.

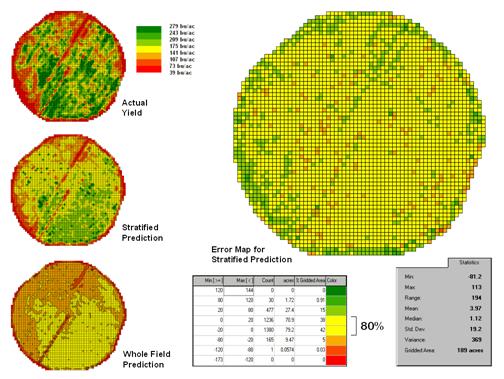

The left side of figure

4.2.5-3 provides a visual comparison between the actual yield and predicted

maps. The stratified prediction shows

detailed estimates that more closely align with the actual yield pattern than

the ‘whole-field’ derived prediction map using a single equation. The error map for the stratified prediction

shows that eighty percent of the estimates are within +/- 20 bushels per

acre. The average error is only 4 bu/ac and having maximum

under/over estimates of –81.2 and 113, respectively. All in all, fairly good yield estimates based

on a remote sensing data collected nearly a month before the field was

harvested.

A couple of things should

be noted from this example of spatial data mining. First, that there is a myriad of other ways

to stratify mapped data—1) Geographic Zones, such as proximity to the field

edge; 2) Dependent Map Zones, such as areas of low, medium and high yield; 3)

Data Zones, such as areas of similar soil nutrient levels; and 4) Correlated

Map Zones, such as micro terrain features identifying small ridges and

depressions. The process of identifying

useful and consistent stratification schemes is an emerging research frontier

in the spatial sciences.

Second, the error map is

a key part in evaluating and refining prediction equations. This point is particularly important if the

equations are to be extended in space and time.

The technique of using the same data set to develop and evaluate the

prediction equations isn’t always adequate.

The results need to be tried at other locations and dates to verify

performance. While spatial data mining

methodology might be at hand, good science is imperative.

Figure 4.2.5-3. Stratified and

whole-field predictions can be compared using statistical techniques.

Finally, one needs to

recognize that spatial data mining is not restricted to precision agriculture

but has potential for analyzing relationships within almost any set of mapped

data. For example, prediction models can

be developed for geo-coded sales from demographic data or timber production

estimates from soil/terrain patterns.

The bottom line is that maps are increasingly seen as organized sets of

data that can be map-ematically analyzed for spatial

relationships— we have only scratched the surface.

5.0 Spatial

Analysis Techniques

While

map analysis tools might at first seem uncomfortable they simply are extensions

of traditional analysis procedures brought on by the digital nature of modern

maps. The previous section 6.0 described

a conceptual framework and some example procedures that extend traditional

statistics to a spatial statistics that investigates numerical relationships

within and among mapped data layers.

Similarly,

a mathematical framework can be used to organize spatial analysis

operations. Like basic math, this

approach uses sequential processing of mathematical operations to perform a

wide variety of complex map analyses. By

controlling the order that the operations are executed, and using a common

database to store the intermediate results, a mathematical-like processing structure

is developed.

This

‘map algebra’ is similar to traditional algebra where basic operations, such as

addition, subtraction and exponentiation, are logically sequenced for specific

variables to form equations—however, in map algebra the variables represent

entire maps consisting of thousands of individual grid values. Most of traditional mathematical

capabilities, plus extensive set of advanced map processing operations,

comprise the map analysis toolbox.

As

with matrix algebra (a mathematics operating on sets of numbers) new operations

emerge that are based on the nature of the data. Matrix algebra’s transposition, inversion and

diagonalization are examples of the extended set of

techniques in matrix algebra.

In

grid-based map analysis, the spatial coincidence and juxtaposition of values

among and within maps create new analytical operation, such as coincidence,

proximity, visual exposure and optimal routes.

These operators are accessed through general purpose map analysis

software available in most

There

are two fundamental conditions required by any spatial analysis package—a consistent data structure and an iterative processing environment. The earlier section 3.0 described the

characteristics of the grid-based data structure by introducing the concepts of

an analysis frame, map stack, data types and display forms. The traditional discrete set of map features

(points, lines and polygons) where extended to map surfaces that characterize

geographic space as a continuum of uniformly-spaced grid cells. This structure forms a framework for the map-ematics

underlying

Figure 5.0-1. An iterative processing environment,

analogous to basic math, is used to derive new map variables.

The

second condition of map analysis provides an iterative processing environment

by logically sequencing map analysis operations. This involves:

- retrieval of one or more map layers from the

database,

- processing that data as specified by the user,

- creation of a new map containing the

processing results, and

- storage of the new map for subsequent

processing.

Each

new map derived as processing continues aligns with the analysis frame so it is

automatically geo-registered to the other maps in the database. The values comprising the derived maps are a

function of the processing specified for the input maps. This cyclical processing provides an

extremely flexible structure similar to “evaluating nested parentheses” in

traditional math. Within this structure,

one first defines the values for each variable and then solves the equation by

performing the mathematical operations on those numbers in the order prescribed

by the equation.

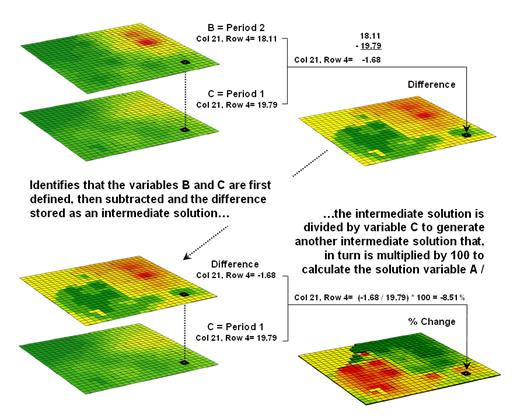

This

same basic mathematical structure provides the framework for computer-assisted

map analysis. The only difference is

that the variables are represented by mapped data composed of thousands of

organized values. Figure 5.0-1 shows a

solution for calculating the percent change in animal activity.

The

processing steps shown in the figure are identical to the algebraic formula for

percent change except the calculations are performed for each grid cell in the study

area and the result is a map that identifies the percent change at each

location. Map analysis identifies what

kind of change (thematic attribute) occurred where (spatial attribute). The characterization of “what and where”

provides information needed for continued

5.1 Spatial Analysis

Framework

Within

this iterative processing structure, four fundamental classes of map analysis

operations can be identified. These

include:

- Reclassifying Maps – involving the reassignment of the

values of an existing map as a function of its initial value, position, size,

shape or contiguity of the spatial configuration associated with each map

category.

- Overlaying Maps – resulting in the creation of a new

map where the value assigned to every location is computed as a function of the

independent values associated with that location on two or more maps.

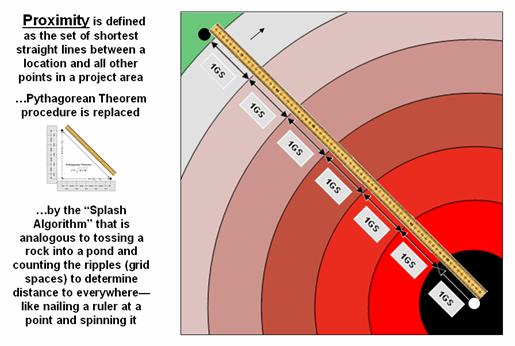

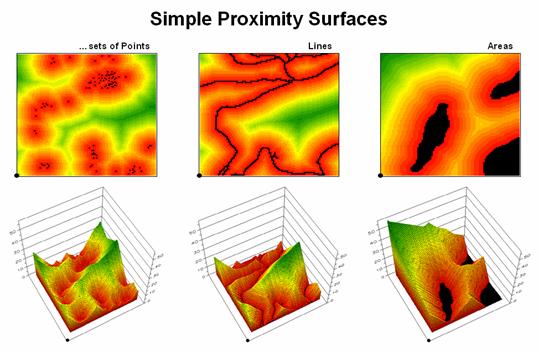

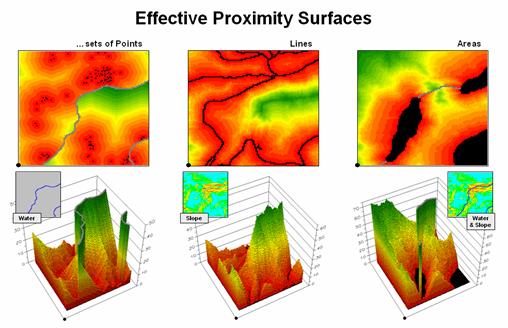

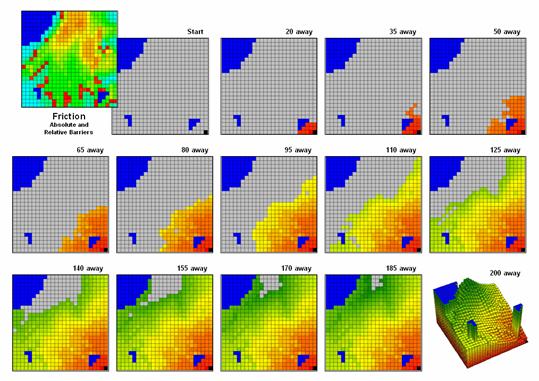

- Measuring Distance and Connectivity – involving the creation of a new map

expressing the distance and route between locations as straight-line length

(simple proximity) or as a function of absolute or relative barriers (effective

proximity).

- Summarizing Neighbors – resulting in the creation of a new

map based on the consideration of values within the general vicinity of target

locations.

Reclassification

operations merely repackage existing information on a single map. Overlay operations, on the other hand,

involve two or more maps and result in the delineation of new boundaries. Distance and connectivity operations are more

advanced techniques that generate entirely new information by characterizing

the relative positioning of map features.

Neighborhood operations summarize the conditions occurring in the

general vicinity of a location.

The

reclassifying and overlaying operations based on point processing are the

backbone of current

The

mathematical structure and classification scheme of Reclassify, Overlay, Distance

and Neighbors form a conceptual framework that is easily adapted to modeling

spatial relationships in both physical and abstract systems. A major advantage is flexibility. For example, a model for siting

a new highway could be developed as a series of processing steps. The analysis likely would consider economic

and social concerns (e.g., proximity to high housing density, visual exposure

to houses), as well as purely engineering ones (e.g., steep slopes, water

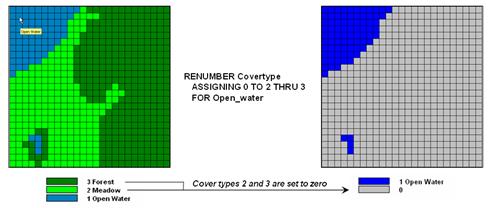

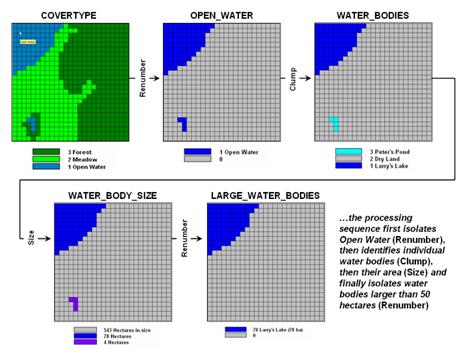

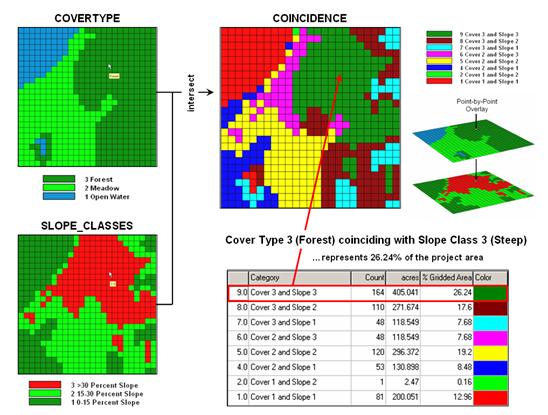

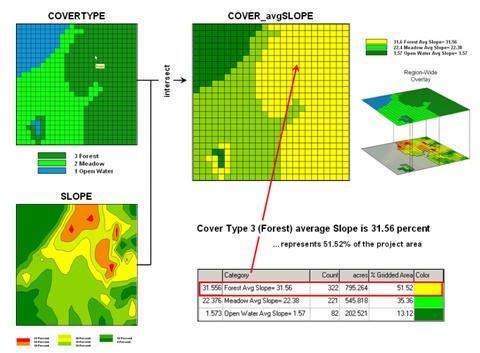

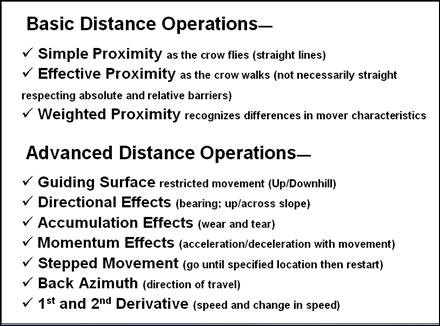

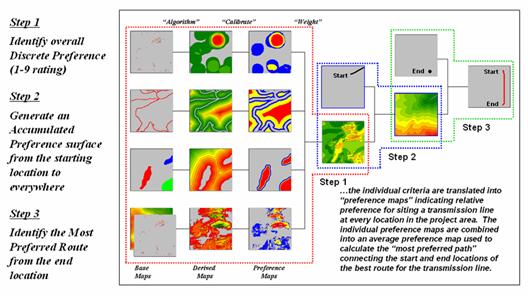

bodies). The combined expression of both